This report contains corrections.

Introduction and summary

The tenor of news coverage about higher education’s gatekeepers for federal student aid shifted notably in 2016. The private agencies—known as accreditors—review colleges to assure their quality and have often been portrayed as lax and derelict in their duty. Some media outlets, for example, have referred to these agencies in headlines such as “The Watchdogs of College Education Rarely Bite” and “College Accreditors Need Higher Standards.”1 But the media coverage around accreditors suddenly shifted, as evidenced by headlines such as “Accreditors Crack Down”2 and “Tougher Scrutiny for Colleges with Low Graduation Rates.”3

The good press coverage followed a new effort announced by the Council of Regional Accrediting Commissions (C-RAC)—an informal group of seven accreditors that oversee colleges in defined geographic regions. In late 2016, the group announced a plan to take a closer look at institutions with low graduation rates. Under the plan, C-RAC members conducted an in-depth review of four-year colleges with graduation rates at or below 25 percent and two-year colleges with graduation rates at or below 15 percent, which is about half the national average.4 The review looked at conditions that may explain the low graduation rates and what colleges were doing to improve, although it did not lead to any immediate sanctions on those schools.
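As a rough illustration of the screening rule described above, the following minimal sketch flags colleges whose graduation rates fall at or below the C-RAC thresholds. The `College` class, the `flagged_for_review` function, and the sample colleges are hypothetical and are not drawn from C-RAC's actual review process; only the two thresholds come from the plan as described.

```python
from dataclasses import dataclass

# Review thresholds (percent) as described in the C-RAC plan above.
REVIEW_THRESHOLDS = {"four-year": 25.0, "two-year": 15.0}


@dataclass
class College:
    name: str               # hypothetical institution name
    level: str              # "four-year" or "two-year"
    graduation_rate: float  # percent of students who graduate


def flagged_for_review(college: College) -> bool:
    """Return True if the graduation rate is at or below the threshold for the college's level."""
    return college.graduation_rate <= REVIEW_THRESHOLDS[college.level]


# Hypothetical data for illustration only; not drawn from the report.
colleges = [
    College("College A", "four-year", 22.0),
    College("College B", "four-year", 48.0),
    College("College C", "two-year", 15.0),
    College("College D", "two-year", 31.0),
]

flagged = [c.name for c in colleges if flagged_for_review(c)]
print(flagged)
```

A screen of this kind only identifies colleges for a closer look. By itself it attaches no consequence to low performance.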

The C-RAC announcement and resulting study were part of an effort to coordinate a response to accreditors’ critics. Such critics—including the Center for American Progress—have pointed to the large number of colleges with poor student outcomes that continue to win accreditation and rarely face significant consequences as a sign that accreditors are overly focused on process and not paying enough attention to actual results.5

When C-RAC issued its final report, it argued a different case on accreditors’ use of student outcomes. Accreditors say that they measure outcomes but must use that data in a nuanced way. There are several reasons for this: Federal data is often unreliable; institutions serve student bodies that are too diverse to be judged uniformly; and institutional quality must be considered in a broader context—not by outcome indicators alone.6

The reality of how accreditors use student outcome data actually lies somewhere in between. This report shows that the common refrain—that accreditors don’t focus on student outcomes—is only half true. Accreditors do much more than measure inputs such as the number of books in a library, which they have long been criticized for doing instead of measuring student outcomes. Most accreditors collect numerous outcome measures every year. The extent to which the data are used to hold colleges accountable for bad outcomes, however, is unclear. As a result, accreditors may review student outcomes, but their standards lack clarity on how a school’s observed performance connects to consequences. With no definition of what performance means in terms of a college’s quality, even the lowest performers pass the bar. These primary findings cover regional accreditors; national accreditors will be addressed later in the report.

The Center for American Progress conducted a detailed investigation of accreditor policies and practices at 11 main regional and national agencies, reviewing standards, guidance, documents, and annual reports to identify to what extent accreditors hold colleges accountable for student outcomes. The analysis also looked at how standards and guidance are used in practice. For each of the seven regional accreditors, the author reviewed documents for two colleges’ accreditation reviews. Examining these documents showed what evidence colleges provide to prove that they are meeting accreditors’ standards as well as how agencies then evaluate whether or not a college is in compliance. A similar review of self-studies from nationally accredited institutions could not be completed because nationally accredited colleges do not typically make these reviews public.

Regional agencies vary in terms of what student outcomes they emphasize, but none have a clear definition for what constitutes poor performance or how observed results connect to accountability actions taken. Some agency policies require that institutions evaluate specific outcomes such as completion in their accreditation reviews. Other agencies leave it up to the institution to determine which outcome measures demonstrate the value of the education they offer. The primary focus of regional agencies, however, is not on the level of performance but rather on the process a given college has to improve its educational offerings. As a result, a school’s success is often measured by whether it has a codified process—not whether its performance is any good. Consequently, low performers may be insufficiently pushed to improve.

In contrast, the policies of national accreditors—which oversee mostly career-focused programs—place a clearer focus on accountability for student outcomes in their reviews. These agencies have minimum performance requirements for outcomes such as completion, job placement, and licensure pass rates. These measures are typically assessed annually, and often down to the individual program level. This structure, however, may not be as strong as it appears. When an institution fails to meet a benchmark, some agencies can be lenient in enforcement. Minimum performance standards may not represent high bars and vary across agencies. In practice, similar colleges are held to divergent expectations by national accreditors on how their students fare.

The good news of this analysis is that many regional and national accrediting agencies already collect some of the student outcome measures and have in place a process for collecting data needed to adopt a more results-based approach. That said, getting all accreditors to such a place demands a sizeable mentality shift to an approach that would require defining adequate performance and establishing a process of accountability across agencies. Changes to federal legislation could help encourage this shift.

To achieve a more outcomes-focused approach, this report recommends that accreditation agencies should:

- Collect common student outcome data across agencies.

- Include equity in data collection.

- Connect data collected explicitly to standards through clear performance benchmarks.

- Establish a defined process in accreditor standards for holding colleges accountable when performance on student outcome benchmarks is not up to standard.

- Support the creation of a federal student-level data system.

In addition to the actions above that accreditors should take, Congress should:

- Require accreditors to have standards on defined student outcomes.

- Create repayment rate and default minimums for federal aid purposes.

Requiring accreditors to focus more on outcomes matters for students. Each year, the U.S. Department of Education provides nearly $130 billion in taxpayer money in the form of student grants and loans to help 13 million students attend more than 6,000 colleges.7 Many of these students, however, will never graduate. Students who do not finish are significantly more likely to default on their student loans, often with catastrophic long-term financial impacts.8 To make matters worse, the higher education system suffers from broad gaps in college attainment and disparities in student loan debt and default by race and income.9 These differences define a system that too often exacerbates inequity instead of serving as an engine for economic mobility. It is all too easy for a college whose students don’t fare well to receive an accreditor’s stamp of approval—and the federal money that comes with it—over and over again, even when student outcomes don’t improve.

While C-RAC’s review of graduation rates is a great first step toward encouraging higher accreditor standards, it will not amount to accountability unless it goes beyond simply reviewing institutions. Instead, accreditors must implement a set policy and process to address low performance on student outcomes going forward.

Background

Accrediting agencies are nonprofit, independent membership associations that serve as gatekeepers to federal student aid dollars. Before students can access federal grants and loans to pay for college, their college must first gain approval from a federally recognized accrediting agency.

The role of accreditation

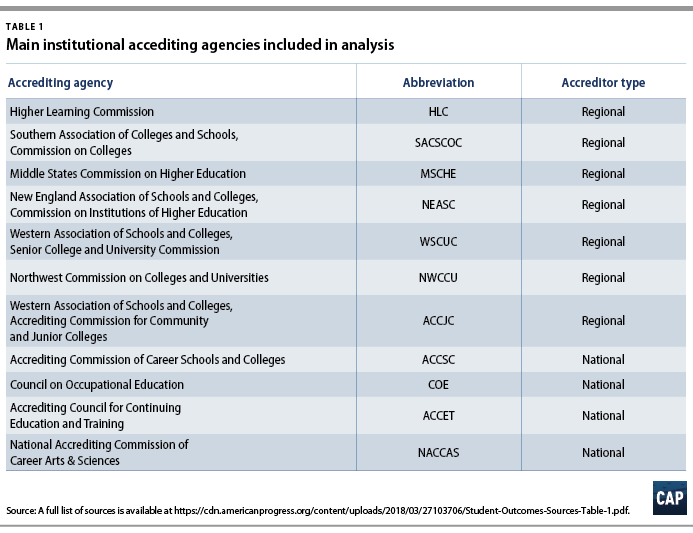

The U.S. Department of Education recognizes 36 accrediting agencies of three different types.10 The first group consists of seven main regional accreditors that oversee schools based on their geographic location. For example, the Southern Association of Colleges and Schools Commission on Colleges (SACSCOC) oversees colleges in the southern states.11 The majority of colleges overseen by regional accreditors are public and private nonprofit colleges. The second group consists of national accrediting agencies that oversee colleges nationwide, most of which are private for-profit colleges. The third group—which is not detailed in this report—consists of programmatic accreditors that oversee specific programs but are not typically the primary gatekeepers to federal financial aid.

To gain approval from an accrediting agency, colleges must prove that they are in compliance with their agency’s standards at least once every 10 years—and timeframes at national accreditors are often shorter. A grant of accreditation after the review process should signal that a college is high quality.

An accreditor must abide by several federal requirements to serve as a gatekeeper to federal financial aid dollars. Under federal law, accrediting agencies are required to have standards on student achievement in addition to other categories such as faculty and finances.12 Accreditors set their own standards on quality that colleges must meet in order to be accredited. Standards, as used here, are the framework an accreditor uses to evaluate a college.

While accreditors are required to examine student achievement, federal rules give accreditors substantial flexibility to decide how they do so. First, federal legislation does not specifically define student achievement, leaving it open to interpretation by both accreditors and schools, the latter of which can define what constitutes success based on their institutional mission. Second, the Higher Education Act states that accreditors should have standards that address:

Success with respect to student achievement in relation to the institution’s mission, which may include different standards for different institutions or programs, as established by the institution, including, as appropriate, consideration of [s]tate licensing examinations, consideration of course completion, and job placement rates.13

Third, each accreditor sets its own standards on quality, which can vary significantly by agency.

Though the requirements outlined in law do not define student outcomes standards, they also do not restrict agencies from setting and creating their own stricter accreditation standards for their institutions.

The accreditation process

The most intensive step in the accreditation review process is a self-study.14 A self-study is a report prepared by a college that provides evidence that it meets each of its accreditor’s standards. A self-study process typically takes 18–24 months, though it can be shorter at nationally accredited schools. After a college submits its self-study, an accreditor arranges a team of volunteer peer reviewers for a campus site visit. During the visit, the team evaluates the college to determine whether it meets each standard. Following the visit, the team files a report that details where the college meets standards, where it falls short, and how it should improve. The institution then has a chance to respond to that report. The ultimate decision on what happens to a school rests with an accreditor’s commissioners. An agency’s commissioners—including faculty, administrators, practitioners, and some members of the public—serve as the official decision-making body and determine whether or not a college gains accreditation.15 The accreditor can also take actions that range from requiring a college to file another report or undergo a visit within a given amount of time to taking punitive action, such as probation. While punitive actions can signal that a college is at risk of losing accreditation, very few colleges actually do.16

In addition to the formal reaccreditation process that occurs at least once every 10 years, accrediting agencies also monitor college performance through annual reports, midterm reviews, and interim monitoring and special visits as needed. Annual reports vary but typically require colleges to submit basic information including data on finances and student outcomes. Some agencies require periodic review reports, but when and if these occur varies widely across agencies. As a result, periodic review reports were not included as part of this analysis.17

Key terms used in this report

Accreditors often use different terms to refer to the multiple steps in the accreditation review process. To avoid confusion, this report uses the most common terms. The following is a list of key terms used throughout this report:

- Standards: Standards are the quality framework an accreditor uses to evaluate a college. The standards define rules a college must meet in order to be accredited. Accrediting agencies create their own standards. While standards vary across agencies, federal law requires agencies to have standards in key areas such as student achievement, faculty, and finances.

- Self-study: A self-study is a report each college must prepare that explains how it meets its accreditor’s standards of quality.

- Accreditor site visit or team report: Accreditor site visit reports or team reports are written by a group of volunteer peer reviewers who evaluate whether a college meets an accreditor’s standards. They often explain where a college performs well, where it falls short, and what it needs to do to improve. These reports are based on information provided in a college’s self-study and found during an accrediting team’s visit.

- Student outcome standard: A student outcome standard is based on quantifiable student achievement measures such as graduation rates, employment rates, and student loan defaults. Under federal legislation, accreditors are required to have standards on student achievement—which may include student outcomes, but is not specifically defined. This report is only concerned with student achievement standards that address student outcomes as defined here.

Methodology

To understand how accreditors use student outcome data in college reviews, CAP analyzed documents from the seven largest regional accrediting agencies and four of the largest national agencies. Table 1 provides a complete list of the accreditors included in this analysis as well as their respective abbreviations.

This analysis relied on a wide range of documents. The author examined accreditor standards to determine how colleges are evaluated on student achievement and what requirements a college must meet to gain accreditation. This report also included a review of any publicly available guidance provided to institutions on what evidence should be included in the self-study.

Accreditors typically have broad definitions of student achievement, including student learning, graduation rates, and postgraduation placement. This report, however, only analyzes standards on quantifiable student achievement measures such as graduation rates, employment, and student loan defaults. While learning is a critical part of student achievement, it cannot be easily quantified and is complex enough to merit its own report.

Due to their broad definitions, standards can often be vague and difficult to understand without real-world context. To better grasp how standards are applied, this analysis included a review of several college self-studies as well as the accrediting site visit team reports that followed. While a site visit team report is not the final say on whether a college meets agency standards, it does heavily influence an agency’s final decision. Institutions have the opportunity to respond to the report, and the agency’s commission determines whether an institution meets each standard. It is important to note, however, that these decision processes are not public and are influenced by the findings from the site visit report.

CAP reviewed a total of 14 colleges’ self-studies and subsequent accreditor site visit team reports—two colleges for each of the seven largest regional accrediting agencies. Nationally accredited colleges do not voluntarily make accreditation documents public; therefore, a similar review of these colleges’ self-studies could not be completed. Reviewing two examples of accreditation reviews for each regional agency by no means captures all the work an agency does, but it can provide additional context for how reviews apply standards.

Most accreditors do not require colleges to make self-studies and accreditor visit reports public. While some public and nonprofit colleges voluntarily post their self-studies and accreditation reviews on their websites, many do not. CAP was unable to find any nationally accredited for-profit college self-study or accreditation site visit team reports that had been voluntarily published. As a result, the review of self-studies relied on colleges that voluntarily post their accreditation documents online, with two exceptions. WSCUC publishes each college’s accreditation site visit team report.18 It does not publish the college’s self-study. ACCJC requires colleges to publish their self-studies, site visit team reports, and any actions taken against them on their own websites.19

Because self-studies and site visit reports are not always public, CAP applied several selection criteria to its review. CAP only reviewed colleges that published both their self-study and their corresponding site visit team report. A self-study on its own only provides information on how a college believes it meets an accreditor’s standards, not how an accreditor judges a college’s performance. This analysis also attempted to include only self-studies and reports that were conducted based on each agency’s most recent standards. This was impossible to do for some agencies due to a recent change in their standards or their lack of transparency. For example, NEASC revised its standards in 2016.20 Of the 28 U.S. colleges that underwent reviews by NEASC in 2016 and 2017, only two colleges—Johnson State College and the University of Massachusetts, Boston—published both their self-studies and accreditor site visit reports. However, the University of Massachusetts, Boston was evaluated based on NEASC’s 2011 standards. In another example, MSCHE revised its standards in 2014, but the standards only became effective for colleges undergoing review in the 2017–2018 school year.21 Because no colleges had completed a self-study or accreditation visit based on the new standards, CAP used the old standards for this portion of its analysis.22 A complete list of accreditor standards, self-studies, accreditor site visit team reports, and other relevant guidance reviewed for this report is included in the Appendix.

Additionally, CAP attempted to review self-studies from a broad range of colleges in order to include examples from a variety of institutions. The two self-studies for each regional agency, for example, typically consisted of one large and one small college; one public and one nonprofit college; or one four-year and one community college.

Lastly, CAP reviewed the instructions for annual reporting required by accreditors as well as the forms and submissions when available. Each agency requires colleges to submit annual reports consisting of a range of financial and student outcome data. These reports provide additional information about the types of evidence accrediting agencies have available to evaluate college quality. If a report was not publicly available, CAP reached out to the agency for more information.

Findings

Student outcome standards among regional accreditors vary in strength and specificity

Overall, this analysis found that the expectations regional accreditors lay out in their standards on student outcomes vary widely. Some agencies require colleges to focus on specific measures; some require goal setting on particular outcomes; and others allow institutions to decide measures that accurately reflect their success. Table 2 shows how student outcome standards compare across agencies. A full list of each agency’s student outcome standards is included in the Appendix.

Five of the 7 agencies’ standards require colleges to measure and report specific student outcomes, although what measures are explicitly mentioned in the standards fluctuates from one agency to another. For example, HLC requires colleges to focus on rates of retention, persistence, and completion.23 WSCUC requires that colleges track retention and completion rates.24 Two accreditors—SACSCOC and NWCCU—leave measures of success more open-ended. SACSCOC requires that colleges evaluate success—which may include retention and graduation rates or student portfolios, among other things—but does not stipulate which specific indicators a college must choose.25 Like SACSCOC, NWCCU allows institutions to determine what measures define their success.26 MSCHE takes a different approach. While the agency specifically recommends measuring rates of retention, persistence, and completion, it does so indirectly. For example, an institution must show its commitment to these measures through its student supports or may use improvements in these measures as evidence of its effectiveness. In other words, retention, persistence, and graduation rates are indirect measures of quality.27

Five of the 7 agencies included in this analysis require colleges to set goals on student performance. ACCJC, for example, has the most detailed standards on institution-set goals and requires colleges to set their own performance expectations.28 The agency requires that institutions set performance goals on course completion rates, the number of degrees awarded, transfer rates, as well as job placement rates and licensure pass rates for every career and technical education program. Colleges are also required to set standards of performance on other measures they deem appropriate to their mission, such as graduation and retention rates. As part of colleges’ self-studies, they must explain how they set the standard, how they evaluate their previous performance, and how their performance compares to their goal. ACCJC’s method presents an alternative to national accreditors’ minimum performance standards. Rather than set one bar all institutions must meet, the agency takes an individualized approach that requires each college to set its own benchmarks for acceptable performance.

Unlike those of other regional agencies, ACCJC’s standards exemplify institutional flexibility that does not come at the expense of accountability. However, goal setting also has an inherent tension. On the one hand, goal setting ensures that colleges strive for improvement. On the other hand, even colleges with the worst performance can get by without being required to take further action. Overall, goal setting allows institutions to determine what level of success is appropriate based on their own unique contexts. While accreditors evaluate institutions’ goals, they never define a minimum bar of performance that is acceptable for all institutions. In practice, some of these goals act more like minimum performance thresholds than goals to strive toward. At MiraCosta College, for example, some institution-set standards are below actual achievement.29 A system that requires colleges to choose their own performance categories also makes it harder to compare performance consistently across colleges.

Only 2 of the 7 regional accrediting agencies have standards that are focused on equity in outcomes. Both WSCUC and ACCJC require institutions to disaggregate and analyze performance data by demographic categories.30 WSCUC requires institutions to disaggregate student data by demographic categories and areas of study, and ACCJC requires institutions to disaggregate and analyze learning and achievement outcomes by subpopulation. When an institution identifies a performance gap, it must provide resources to address and fix the gap and must evaluate the effectiveness of its strategies to do so.

Standards that focus on equity are important because they require that institutions go beyond overall performance indicators to ensure that they are serving all students well. Without a coordinated effort across agencies, however, colleges can miss some of the important gaps that occur within and across institutions.

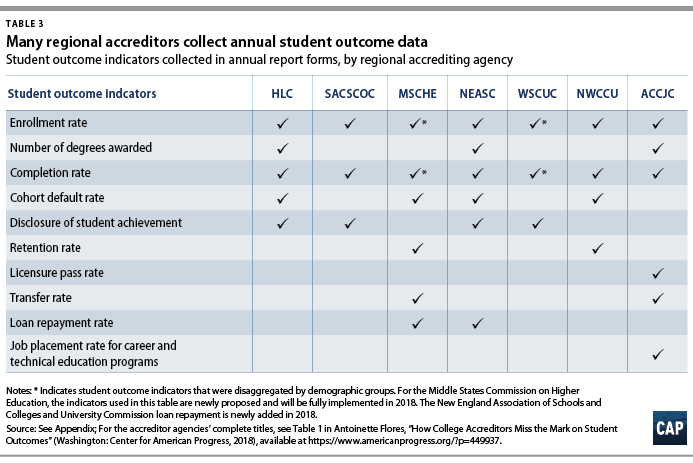

Regional accreditors collect student outcome data but don’t connect them to standards

A review of required annual reporting forms shows that regional accreditors already collect data on numerous student outcome indicators each year (see Appendix). This undercuts the common criticism that accreditors don’t measure performance on even the most basic outcomes such as graduation rates. Table 3 shows which student outcome indicators are collected by each regional agency in annually filed forms.

All agencies collect annual data on enrollment and completion.31 Four agencies collect cohort default rate data—the percentage of a school’s borrowers who enter repayment and default within three years. Three agencies check that outcome data are publicly disclosed on colleges’ websites. Beyond these indicators, three agencies collect more information related to federal financial aid. HLC collects the percentage of Pell Grant recipients and the average Pell Grant received, which gives an indication of the college’s low-income student enrollment and overall financial aid need.32 In addition, both NEASC and MSCHE recently began to collect student loan repayment rate data.33

While these data collections are important, they are not well-connected to student outcome standards. For example, HLC standards require institutions to focus on persistence, retention, completion, and success after graduation,34 but the agency does not collect data on these measures annually.35 NWCCU collects data on completion, default, and retention but does not have specific standards related to these outcomes.36 It is not clear how NEASC and MSCHE will use student loan repayment rate data, but as of now, the collection is not directly related to standards.37

Without linking long-term data collection to standards, accreditors are unable to hold colleges accountable for what the data may show. Accreditors can only take action against an institution if it violates one or more of their standards. Therefore, how a college performs on these measures does not directly factor into whether or not it should be accredited.

As part of its annual report, HLC has begun flagging colleges with weak graduation and persistence rates compared with their peers for follow-up.38 The agency flags institutions that fall in the top percentages of the institutions’ peers for further follow-up. Peers are defined as two-year small or large institutions or four-year small or large institutions. It is unclear what the follow-up consists of; how many institutions fall within this category; or whether the follow-up leads to further action.

ACCJC provides a good example of how to better connect annual data collection to standards. The agency’s standards require institutions to set goals for specific outcomes, and its annual review form requires each college to list its institution-set standards as well as its actual performance.39 As a result, the agency can keep track of what colleges say they should achieve as a minimum benchmark and then compare it with the colleges’ actual performance.40
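The comparison described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `performance_gaps` function, the measure names, and all figures are hypothetical and do not reproduce ACCJC's annual report form or any college's data.

```python
def performance_gaps(institution_set, actual):
    """Return actual performance minus the institution-set standard for each measure reported in both."""
    return {
        measure: actual[measure] - floor
        for measure, floor in institution_set.items()
        if measure in actual
    }


# Hypothetical institution-set standards and actual results for one college.
institution_set = {
    "course_completion_rate": 70.0,  # percent
    "job_placement_rate": 60.0,      # percent
    "degrees_awarded": 900,          # count
}
actual = {
    "course_completion_rate": 74.5,
    "job_placement_rate": 55.0,
    "degrees_awarded": 1040,
}

gaps = performance_gaps(institution_set, actual)

# Measures on which the college fell short of its own standard.
below_standard = sorted(m for m, gap in gaps.items() if gap < 0)
print(below_standard)
```

A negative gap shows where a college missed the benchmark it set for itself. What an accreditor does with that information is a separate policy decision that the sketch does not model.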

Annual data collection processes mean that regional accreditors already have much of the necessary information and infrastructure in place to enhance their focus on student outcomes. But just because accreditors have the data does not mean they will use them to drive accountability. Accreditors need to decide what level of performance is adequate and what actions they will take when an institution is not up to par.

Aligning annual reports and standards would allow accreditors to set strong student outcome standards and collect data annually to hold institutions accountable. Without this connection and a history of data, accreditor standards fall short of actual accountability.

Without clear minimum standards, evaluating performance gets lost in the shuffle

A review of self-studies and accreditor team reports shows that even when an institution is reporting data, its actual performance on those measures can still be overlooked. As a result, accreditors and institutions may produce a great deal of documentation about how schools use and report data while never discussing what the data actually demonstrate in terms of results. A few examples show how this phenomenon plays out in practice.

Self-studies and site visit team reports can gloss over a long list of student outcome measures to prioritize improvement rather than accountability. NEASC, for example, requires institutions to include Data First forms as the basis of their self-study.41 These forms include data on a wide range of institutional characteristics and student outcomes such as student debt levels and rates of enrollment, retention, graduation, licensure passage, job placement, and default over several years. NEASC standards require that “[t]he institution demonstrates its effectiveness by ensuring satisfactory levels of student achievement on mission-appropriate student outcomes.”42 Standards, however, do not define what level of performance is satisfactory. Without a clear definition, a college can meet a standard—even when, by its own admission, its performance is unsatisfactory.

Consider, for example, the self-study of Johnson State College (JSC), a small, public four-year college in Vermont serving 1,500 students.43 As part of its 2016 NEASC self-study, the college reported a four-year graduation rate of between 15 and 17 percent and a six-year graduation rate of 35 percent. The college acknowledged that these rates are “low” and “daunting.”44 While it has taken steps to improve, such as instituting early warning mechanisms and piloting tools for identifying at-risk students, the school’s self-study admitted that this approach did not work and that a new plan is needed. JSC set a goal for the following year to increase the graduation rate to 27 percent, noting that it will continue to work on a strategy to improve the college’s low graduation and retention rates.45

The NEASC site visit team report acknowledged JSC’s low performance but found its focus on improvement satisfactory.46 It first mentioned the graduation and retention rates the college provided and noted that they were one set of indicators the college used to monitor effectiveness. It then acknowledged that these rates were lower than those of other Vermont colleges but that JSC recognized its need for improvement. The report stated that the college had considerably higher graduation rates for Pell Grant students and first-generation college students. Furthermore, the report highlighted a promising initiative the college instituted—themed first-year learning communities—which were slowly improving first-year retention rates. At the end of the report, the visiting team listed the college’s strengths and weaknesses. Among its strengths were JSC’s commitment to student success and its investment in the first-year experience, both of which have improved retention. The site visit team did not mention anything related to student outcomes in its list of JSC’s weaknesses, nor did it have any recommendations on how the school could improve.47 In other words, according to the visiting team report, JSC’s efforts to improve its persistently low graduation rates were sufficient, even though there had been little measurable improvement.

Standards’ specificity does not lead to greater focus on actual performance

Whether or not an accreditor’s standards include specific student outcome measures that must be examined does not appear to create a greater focus on performance. SACSCOC, HLC, and ACCJC’s standards on student outcomes vary considerably in terms of their specificity. ACCJC requires specific, institution-set performance goals; HLC requires a focus on particular outcomes; and SACSCOC leaves performance up to institutions. Examples from each agency, however, show that all suffer from similar shortcomings.

Southern West Virginia Community and Technical College (SWVCTC) serves roughly 1,700 students and is accredited by HLC.48 In its self-study, SWVCTC described its process of collecting and reporting data; mentioned its goals for improving the number of graduates; and detailed how it plans to do so, such as by revising developmental education. Nowhere in the self-study did the college assess its actual performance or improvement since its last review.49 The HLC site team report also never discussed actual performance. It stated that SWVCTC met the standard because it collected and reported data and had goals for improvement.50

The SACSCOC review of the University of South Carolina (USC) Upstate contained similar issues. USC Upstate is a public four-year college serving just under 6,000 students.51 In its own evaluation, the school provided detailed data on student outcomes, including retention and graduation rates, course completion, licensing examination results, and job placement.52 SACSCOC’s final site visit report stated that USC Upstate regularly reports data and discusses its process for doing so, but the report contained no analysis of the school’s actual performance. The institution has a six-year graduation rate of 38 percent.53

MiraCosta College is a public community college in California serving almost 15,000 students. It is accredited by ACCJC.54 As part of its 2016 self-study, the college set its own goals for performance on indicators including the number of transfers to four-year colleges, persistence rates, and the number of degrees and certificates awarded, in addition to required categories such as course completion and licensure pass rates. It also included detailed data on employment rates and program completion disaggregated by various student demographics.55 ACCJC’s site team report found the college in compliance because it had set standards, assessed them, and used results to lead improvement efforts. It did not focus on the college’s actual performance on these indicators.56

While ACCJC’s approach is valuable and data-focused, it glosses over student outcomes without judging the college’s performance or need for improvement. It allows low-performing colleges to meet the standards as long as they set goals, strive to achieve them, and implement improvements.

The gap between data collection and data use among regional accreditors has significant implications for students. Without a baseline standard for performance or improvement, accreditors likely end up measuring institutions’ intentions and processes—not the actual results they achieved.

National accreditors focus on accountability of student outcome measures

In contrast to regional accreditors, the four national accreditation agencies have clear, quantifiable standards on student outcomes. These agencies’ standards require institutions to meet minimum bars of performance each year based on various measures. Three of the four agencies require institutions to report outcomes at the program level. The one exception, NACCAS, primarily oversees cosmetology schools, so although it does not explicitly measure program-level outcomes, the colleges it oversees typically offer a single program.57 Monitoring performance at the program level allows accreditors to further review or sanction individual programs rather than limit their examination to the institution as a whole.

Although all national agencies consider student outcome data, what they look for and the benchmarks they set still vary a great deal (see Figure 4). ACCSC, NACCAS, and COE, for example, each have minimum bars of performance on graduation, job placement, and licensure pass rates, while ACCET only requires performance benchmarks for graduation and job placement rates.58 ACCSC requires programs to have a minimum completion rate that varies from as low as 40 percent for programs longer than two years to 84 percent for certificate and training programs that are one to three months long.59 The three other national agencies have flat rates for graduation that vary from as low as 50 percent at NACCAS to 67 percent at COE.60

While clear performance benchmarks ensure a minimum bar of quality that institutions must meet, they are only worthwhile if the thresholds are ambitious and catch the worst performers. Variation in performance benchmarks means that institutional quality and the likelihood of success potentially depend on which accreditor oversees the school students attend.

It also matters how an agency sets its benchmarks. ACCSC, for example, sets its thresholds one standard deviation below the average performance among its institutions.61 This assumes that average performance is adequate. But if an established benchmark is set low enough that an overwhelming majority of institutions or programs pass, it does nothing to encourage improvement.
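
To make the arithmetic concrete, the sketch below computes a benchmark set one standard deviation below the mean and counts how many programs clear it. The completion rates are invented for illustration and the function name is hypothetical; ACCSC’s actual calculation may differ in its details.

```python
from statistics import mean, pstdev

def benchmark_one_sd_below(rates):
    """Return a threshold one standard deviation below the mean rate."""
    return mean(rates) - pstdev(rates)

# Hypothetical completion rates (percent) for ten programs; not real data.
rates = [35, 48, 52, 55, 58, 60, 63, 66, 70, 83]

threshold = benchmark_one_sd_below(rates)
passing = [r for r in rates if r >= threshold]
share_passing = len(passing) / len(rates)

print(f"threshold: {threshold:.1f} percent")
print(f"share of programs passing: {share_passing:.0%}")
```

In this invented sample, nine of the ten programs clear the bar. More generally, when rates are roughly normally distributed, about 84 percent of programs sit above a line drawn one standard deviation below the mean. And because the threshold moves with the group’s own average, a sector-wide decline lowers the bar rather than flagging more programs.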

Performance benchmarks don’t guarantee that poor outcomes will be fixed

Performance benchmarks set a clear line of what outcomes are acceptable, but the actions an agency takes when a college is underperforming can undermine accountability.

When a college underperforms, some agencies take a hard line, while others allow institutions flexibility in explaining their performance. For instance, programs that fail COE’s benchmark are placed on warning and have one to two years to bring the program into compliance or be placed on probation.62 Depending on how far below the threshold a program falls, ACCET may require additional reporting or issue a programmatic probation.63 For example, if a college’s job placement or completion rate falls between 56 percent and 69.9 percent, it is required to submit a report that includes a management plan for improving rates and a proposed timeframe for coming into compliance. Schools with performance rates that fall below 56 percent are placed on programmatic probation and are required to submit a report and detailed analysis. The accrediting agency may give institutions with low completion rates a reprieve if they have placement rates above the benchmark rate in that program.*
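
The ACCET tiers described above can be written as a simple decision rule. This is a sketch under stated assumptions: the 70 percent benchmark is inferred from the 69.9 percent upper bound and is not stated in the text, the function and label names are hypothetical, and the reprieve is modeled only as eligibility because the text says the agency may grant it.

```python
ASSUMED_BENCHMARK = 70.0  # assumption: inferred from the 69.9 percent upper bound
PROBATION_FLOOR = 56.0    # from the text: rates below this lead to probation

def accet_response(rate):
    """Map a completion or job placement rate (percent) to the tier described above."""
    if rate >= ASSUMED_BENCHMARK:
        return "in compliance"
    if rate >= PROBATION_FLOOR:
        return "report with management plan and timeframe"
    return "programmatic probation, report, and detailed analysis"

def reprieve_possible(completion_rate, placement_rate):
    """True when a low completion rate is paired with above-benchmark placement.

    This marks eligibility only; it does not predict the agency's decision.
    """
    return completion_rate < ASSUMED_BENCHMARK and placement_rate > ASSUMED_BENCHMARK

for rate in (72.0, 63.5, 55.9):
    print(rate, "->", accet_response(rate))
print("reprieve possible:", reprieve_possible(52.0, 81.0))
```

Even in this simplified form, the rule shows where discretion enters: the tiers themselves are mechanical, but the reprieve is a judgment call layered on top of them.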

In another example, ACCSC can take a range of actions such as placing a program on heightened monitoring, warning, or probation; freezing enrollment; and revoking a program altogether. It also gives institutions the chance to demonstrate successful achievement by showing evidence of student learning or external factors (such as economic conditions, the student population they serve, or state and national trends) that might explain their inability to meet the benchmarks.64 In November 2012, for example, ACCSC first acted to limit program enrollment at one of its schools with systemic student achievement problems.65 These problems were widespread and included below-benchmark performance in student outcomes at 9 of the school’s 10 programs. The problems continued over the next five years, and although ACCSC took many actions, including warnings, probation, show cause, and eventually revoking approval of baccalaureate programs, the college remains accredited and on warning.66

The procedures described above show that institutions are given considerable leeway and can remain accredited—even if they don’t meet minimum performance benchmarks. How often accreditors accept alternative responses or measures—as well as how long colleges remain accredited despite poor performance—is unclear, since information on when a college underperforms and the accreditors’ decision process is typically not public. Without greater transparency on outcomes and sanctions, it is difficult to determine the effectiveness of these policies.

Annual reporting among national agencies focuses on compliance

Unlike regional agencies, national accreditors connect student outcomes directly to their standards and annual reporting requirements. Figure 5 shows that all four agencies track outcomes based on the performance benchmarks required in the agency standards. Collecting this information in annual reports ensures that colleges are meeting performance benchmarks each year—not just once every several years.

National agencies are also more likely to require institutions to report annually on red flags, such as whether the college is under investigation. Figure 5 shows what accreditors require institutions to include in their annual report. Three of the four national agencies ask institutions whether they have had any kind of negative audit at the state or federal level. Both ACCSC and ACCET require institutions to report on actions taken by other accreditors, complaints, and investigations.

In contrast, only two regional agencies ask whether institutions were subject to adverse actions by another accreditor. This does not mean that regionals do not check this information; many require that institutions report any kind of negative action when it happens. HLC, for example, requires institutions to notify the agency when they receive an adverse action from another accrediting agency or if a state issues an action against them.67 But HLC does not ask institutions to provide this information in annual reporting. SACSCOC, meanwhile, asks about federal audits and actions by other accreditors in the interim report schools complete five years into their accreditation cycle.68

Part of why national accreditors are more likely to include compliance questions in annual reporting might have to do with the types of institutions these agencies oversee. National agencies mostly oversee for-profit colleges, which historically have had more troublesome track records with cases of fraud than have public and private nonprofit colleges. Explicitly asking for this information every year ensures that problematic institutions don’t slip through the cracks.

Recommendations for accrediting agencies

As gatekeepers to federal student aid, accrediting agencies have a responsibility to ensure that institutions are high-quality in order to protect both students and taxpayers. CAP’s review shows that, particularly among regional agencies, accreditors’ lack of clear standards for student outcomes means that institutional performance is not sufficiently emphasized. There is no minimum student outcome result institutions must meet in order to be accredited, nor does low observed performance consistently trigger further examination. The result is that accredited institutions can easily fail their students. National agencies’ clear performance standards and annual data collection processes do more to hold colleges accountable, but agencies are inconsistent and, in many cases, soft on enforcement.

Fortunately, CAP’s review also shows that accreditors have an annual data collection process in place and already possess some of the data required to enhance their focus on outcomes. In order to fix these problems, there must be a stronger tie between data collection and standards. Below are recommendations for how accreditors can improve and hold colleges accountable.

Require collection and analysis of common student outcome data across accreditors

Variation in accreditors’ measurements and in established bars of performance undermines agencies’ goals of quality and accountability. Under the current system, an institution overseen by one agency must report one set of data, and an institution overseen by a different agency reports another set of data. The variation in what data is collected makes evaluating overall performance and drawing comparisons across institutions difficult.

Accreditors should agree upon what student outcome data to collect as a standard measure of quality across all agencies. Similar to the work done as part of the C-RAC pilot, both regional and national agencies should decide together what data to collect and how to report it. This may require measures to differ based on institution type or mission, because outcomes for career-focused institutions or programs may not necessarily be the same as outcomes for liberal arts-focused institutions. Accreditors may also agree that community colleges and four-year colleges should be judged based on different measures. Furthermore, accreditors need multiple indicators that capture how students fare while they are enrolled—such as retention and completion rates—and after they’ve left—such as job placement rates and student loan repayment.

Accreditors should also create a definition of each measure so that agencies can collect data in the same way. For example, while each of the national agencies consistently collects data on completion, job placement, and licensure pass rates, their definitions of each measure vary. This variation makes it difficult to compare performance across institutions. Regional agencies have all attempted to place a greater emphasis on completion rates through the C-RAC study, but all found that the federal graduation rate does not accurately depict outcomes consistently across colleges.69 Regional agencies can and should come up with their own measures to best capture student outcomes.

Include equity in data collection

Measuring institutions’ overall performance is not enough. Accreditors should require institutions to disaggregate performance data by demographic groups including, at least, race, income, and gender—and ideally attendance status and students requiring developmental education as well. These measures are important to ensure institutions are achieving equitable outcomes for all students. Only two agencies require colleges to look at outcomes by demographics—such as race and income—to evaluate performance. WSCUC and ACCJC’s standards that require colleges to disaggregate data represent great starting points for other agencies.70 However, even these standards—which allow colleges to choose which measures and demographics they disaggregate—leave too much up to each college’s discretion.
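
A small worked example shows why an overall rate alone can mislead. The sketch below uses invented student records and a hypothetical helper function; the groups and counts are assumptions chosen only to illustrate the point, not data from any college.

```python
from collections import defaultdict

# Hypothetical student records: (group, graduated). Not real data.
records = (
    [("Pell", True)] * 20 + [("Pell", False)] * 80
    + [("non-Pell", True)] * 60 + [("non-Pell", False)] * 40
)

def graduation_rates_by_group(records):
    """Return {group: share graduated} plus an 'overall' entry."""
    totals = defaultdict(int)
    grads = defaultdict(int)
    for group, graduated in records:
        # Count each student once in their own group and once overall.
        for key in (group, "overall"):
            totals[key] += 1
            grads[key] += int(graduated)
    return {key: grads[key] / totals[key] for key in totals}

rates = graduation_rates_by_group(records)
for key, rate in rates.items():
    print(f"{key}: {rate:.0%}")
```

In this invented cohort, the overall rate of 40 percent hides a 40-point gap between the two groups, which only appears once the data are disaggregated.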

Better connect standards and annual data collection through clear performance expectations

Accreditors already have the foundations of an annual reporting process and years of student outcome data in place to enhance their reviews, but it’s not always clear how this information is factored into accreditation reviews and decisions. Agencies should revise their standards to articulate clear performance expectations. This does not necessarily mean accreditors should have a bright-line standard that automatically leads to a loss of federal financial aid when a school falls short. Instead, accreditors could create a standard that automatically triggers an in-depth review or a shortened accreditation cycle. Benchmarks should be established for measures beyond completion rates, such as loan default and repayment. Performance benchmarks can be designed to accomplish multiple goals by focusing attention on the lowest performers while also setting improvement goals for all institutions.

Accreditors should ensure that annually collected data feed into what schools will be measured against and need to discuss in their self-studies. This connection to standards also must ensure that accreditor reviews touch on the observed performance level, not just on the process and use of data. Connecting these processes ensures that when a college is judged against the standards, the judgment is grounded in years of performance data. It also guarantees that colleges are held accountable for a basic level of performance and improvement every year, not just when they are reaccredited.

Establish processes for data collection and accountability for low-performing colleges

Establishing expectations for student outcomes that are connected to standards must be paired with instituting clearer processes for actions agencies should take if an institution’s student outcomes fail to meet a benchmark performance level. Accreditors must agree on what happens to colleges that fall below established benchmarks and define a consistent timeline for colleges to show improvement. Measuring student outcomes and establishing benchmarks are meaningless without accountability for institutions that consistently fail to perform and fail to improve. Action must go beyond interim reporting, shortened accreditation cycles, and special visits, which are tactics accreditors sometimes use when colleges show serious problems.

Support a federal student-level data system

Creating a federal student-level data system (SLDS) would make it much easier for accreditors to collect and use disaggregated student outcome data. An SLDS would allow all institutions to report detailed data at the individual student level to the federal government rather than at the institution-wide level. Under federal law, colleges currently report dozens of data elements ranging from enrollment and graduation rates to revenue and spending.71 They then provide substantial data to accreditors that may or may not line up with required federal reporting. This setup presents two main challenges. First, current data systems may not produce indicators that are useful for institutions or accreditors. Until October 2017, the federal graduation rate only included first-time, full-time students, excluding those who transferred or enrolled as part-time students.72 Accreditors and institutions criticized this measure as a poor judge of institutional performance, which has weakened their ability to use it.73 Second, the need to report data to both the federal government and accreditors creates a greater burden for institutions. A federal student-level data system would capture more accurate and complete student outcome data and decrease both the burden on colleges reporting data and the need for low-resourced accreditors to actively collect it.

Recommendations for Congress

Congress has the power to make legislative changes that would require accreditors to focus more on outcomes in a clear and consistent way. To do this, Congress should take the following steps.

Require accreditors to have benchmark standards on defined student outcomes

Current legislation requires accreditors to have standards on student achievement but does not define student achievement, which leaves it open to interpretation. It also gives colleges the authority to define success based on their institutional mission, so that successful student achievement can vary by individual school. While some variation based on unique institutional missions is important, there should be a baseline level of student outcome performance required in order for colleges to access federal dollars. Legislation should define which student outcomes accreditors should have standards on, such as graduation rates, student loan repayment, and default. These standards should clearly define the adequate level of performance as well as the agency’s process when performance is not up to par. Federal reviews of accreditors should consider variation in the standards accreditors set to ensure that all are using best practices. National agencies, for example, set performance benchmarks that vary widely, and federal reviews only consider whether the agency has a standard—not whether the standard is adequate. Accreditors should be held accountable to ensure that their standards are adequate and in line with other agencies’.

Create loan repayment rate and default minimums

Policymakers should further bolster and support accreditors’ efforts by creating performance benchmarks on outcomes specifically related to federal aid, such as student loan repayment rates, and by strengthening the cohort default rate already required.74 Today, colleges are required to report cohort default rates, a measure of the percentage of student loan borrowers who default within three years after entering repayment. While important, the measure rarely results in colleges being sanctioned for poor performance.75 The cohort default rate should be strengthened, and using repayment rates may help solve some of the problems with the measure.76

Conclusion

As gatekeepers to federal aid dollars, accrediting agencies must ensure that colleges are providing a quality education and that America’s higher education system is truly a generator of social and economic mobility. This report shows that, for the majority of accreditors, student outcomes are not a main priority and equity is mostly an afterthought. While the recent effort of C-RAC was a critical first step to addressing poor student outcomes, it does not amount to accountability. Fortunately, there is a path to fixing this problem. Actually doing so, however, will require building performance on student outcomes into accreditor standards. The lack of true accountability means accreditors avoid engaging with the poorest outcomes and fail to help institutions improve how well they serve students in a tangible way.

For regional accreditors, change means implementing minimum performance indicators that trigger meaningful action and require improvement. For national accreditors, improvement means ensuring benchmarks are strong and taking action against low-performing colleges instead of giving them additional chances. The opportunity to ensure strong student outcomes in postsecondary education exists—accreditors just have to be willing to take it.

*Correction, May 7: This report was updated to reflect that ACCET commissioners may make an exception for underperformance if a program has a low completion rate but a higher-than-average job placement rate.

About the author

Antoinette Flores is an associate director for Postsecondary Education Policy at the Center for American Progress. Her work focuses on improving quality assurance, access, and affordability in higher education.

Acknowledgments

The author would like to thank Mary Ellen Petrisko, former president of WSCUC, and Dr. Susan Phillips, professor at the State University at Albany and former chair and current member of the National Advisory Committee on Institutional Quality and Integrity, for providing expert feedback.